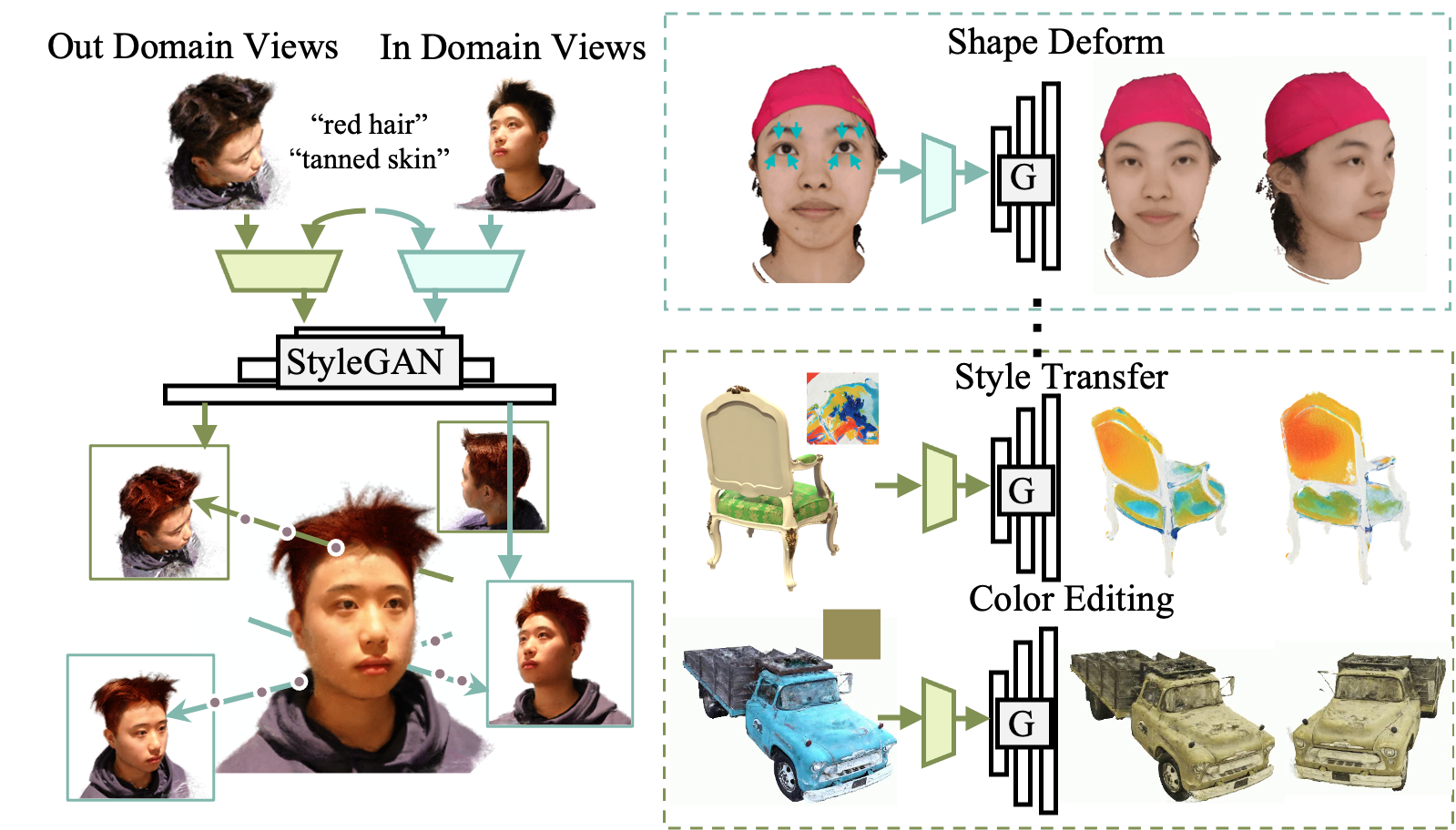

We present NeRFEditor, an efficient learning framework for 3D scene editing that takes a video captured over 360° as input and outputs a high-quality, identity-preserving stylized 3D scene. Our method supports diverse types of editing, such as editing guided by reference images, text prompts, or user interactions.

We achieve this by having a pre-trained StyleGAN model and a NeRF model learn from each other. Specifically, we use the NeRF model to generate numerous image-angle pairs to train an adjustor, which adjusts the StyleGAN latent code so that StyleGAN generates high-fidelity stylized images for any given angle. To extend editing to views outside the GAN's domain, we devise a second module, trained in a self-supervised manner, that maps novel-view images into the latent space of StyleGAN, allowing StyleGAN to generate stylized images for those novel views. Together, these two modules produce guidance images covering the full 360° of views, which are used to fine-tune the NeRF to reproduce the stylization; we propose a stable fine-tuning strategy for this step.
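The abstract does not say how the image-angle pairs are produced from the NeRF. The following is a minimal sketch, assuming the NeRF is rendered from cameras placed on a circular orbit around the scene at a fixed elevation, with each rendered image paired with its azimuth angle. The following are all hypothetical choices for illustration, not the authors' code:

* the names `render_fn`, `sample_orbit_poses`, and `make_image_angle_pairs`;
* the default radius and elevation;
* the OpenGL-style camera-to-world convention.

```python
import numpy as np


def look_at(cam_pos, target=np.zeros(3), up=np.array([0.0, 0.0, 1.0])):
    """Return a 4x4 camera-to-world matrix (assumed OpenGL convention: camera looks down -z)."""
    forward = target - cam_pos
    forward = forward / np.linalg.norm(forward)
    right = np.cross(forward, up)
    right = right / np.linalg.norm(right)
    true_up = np.cross(right, forward)  # unit length, orthogonal to right and forward
    c2w = np.eye(4)
    c2w[:3, 0] = right
    c2w[:3, 1] = true_up
    c2w[:3, 2] = -forward
    c2w[:3, 3] = cam_pos
    return c2w


def sample_orbit_poses(n_views, radius=4.0, elevation_deg=15.0):
    """Hypothetical helper: n_views cameras evenly spaced over 360 degrees of azimuth.

    elevation_deg must stay strictly below 90, otherwise forward is parallel to up.
    """
    azimuths = np.linspace(0.0, 360.0, n_views, endpoint=False)
    elev = np.deg2rad(elevation_deg)
    poses = []
    for az in azimuths:
        a = np.deg2rad(az)
        cam_pos = radius * np.array(
            [np.cos(elev) * np.cos(a), np.cos(elev) * np.sin(a), np.sin(elev)]
        )
        poses.append(look_at(cam_pos))
    return azimuths, np.stack(poses)


def make_image_angle_pairs(render_fn, n_views=36, **orbit_kwargs):
    """Pair each image rendered by render_fn (a stand-in for the NeRF) with its azimuth."""
    azimuths, poses = sample_orbit_poses(n_views, **orbit_kwargs)
    return [(render_fn(pose), az) for pose, az in zip(poses, azimuths)]


if __name__ == "__main__":
    # Dummy renderer in place of a trained NeRF: returns a blank 64x64 RGB image.
    pairs = make_image_angle_pairs(lambda pose: np.zeros((64, 64, 3)), n_views=8)
    for image, angle in pairs:
        print(image.shape, angle)
```

In this sketch, sampling more views gives the adjustor denser angular supervision at the cost of more NeRF renders. A real pipeline might also vary elevation, which is left out here for brevity.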

Experiments show that NeRFEditor outperforms prior work on benchmark and real-world scenes with better editability, fidelity, and identity preservation.

@article{sun2022nerfeditor,
  title={NeRFEditor: Differentiable Style Decomposition for Full 3D Scene Editing},
  author={Sun, Chunyi and Liu, Yanbing and Han, Junlin and Gould, Stephen},
  journal={arXiv preprint arXiv:2212.03848},
  year={2022}
}